Camera Calibration

Extrinsic and Intrinsic Camera Calibration. We use the right camera model, calibration pattern and algorithms for your specific use case.

Read Camera Calibration

Posted on 29 December 2023

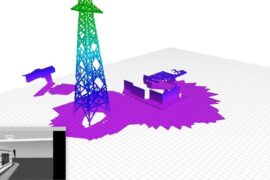

This blog post explains when and why to use multi-camera systems in robotic applications. We walk through the scenarios where multiple cameras are not just beneficial but necessary, explore how these setups can improve field of view, resolution, and robustness, and discuss the caveats and technical considerations that come with them. Whether you work on mobile robots, pick-and-place tasks, or complex 3D modeling, sooner or later you'll face the question: should I add another camera?

Two MIR robots with a dual-camera setup for collision avoidance.

We identified 3 clear cases where engineers add one or more extra cameras:

Let’s now take a look at the issues and trade-offs you may encounter when adding one or more cameras to your system.

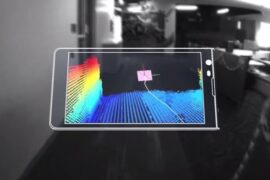

Multi-camera systems can quickly overload a robot's CPU because of the amount of image data they generate, so more efficient processing is needed. GPUs are better suited to large volumes of visual data, and NVIDIA created the Isaac ROS ARGUS library to help offload some of the processing to the GPU. Additionally, selecting cameras that compute depth maps internally on their Vision Processing Units (VPUs), like the Intel RealSense, can significantly reduce the processing load on the robot's CPU.
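To get a feeling for the numbers, here is a minimal back-of-the-envelope sketch of the raw image data a multi-camera setup produces. The resolution, pixel format and frame rate are illustrative assumptions, not values from a specific camera, and the function name `raw_data_rate_mb_s` is ours.

```python
def raw_data_rate_mb_s(width, height, bytes_per_pixel, fps, num_cameras=1):
    """Uncompressed image data rate in megabytes per second (1 MB = 1e6 bytes)."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * fps * num_cameras / 1e6


# Assumed example: 1280x720 RGB (3 bytes per pixel) at 30 fps.
one_camera = raw_data_rate_mb_s(1280, 720, 3, 30)
four_cameras = raw_data_rate_mb_s(1280, 720, 3, 30, num_cameras=4)
print(f"1 camera: {one_camera:.1f} MB/s, 4 cameras: {four_cameras:.1f} MB/s")
```

With these assumed settings a single camera already produces about 83 MB/s of uncompressed data, and four cameras about 332 MB/s, before any processing has been done on the images.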

Running the cameras at full frame rate provides more detailed, more up-to-date visual data, which is crucial for navigation tasks. We typically update the state model of the robot's environment with every camera frame in order to reduce noise and get better measurements. However, this comes at the cost of increased data processing requirements. Reducing the frame rate alleviates the processing burden, but it may compromise the system's responsiveness when the cameras are used in feedback loops, and it increases the noise on measurements and cost maps of the environment.
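The trade-off can be made concrete with a small sketch. It assumes a static scene and independent, zero-mean noise on every frame, so that averaging N frames reduces the noise standard deviation by a factor of sqrt(N). Real state estimators (for example a Kalman filter) are more involved, and the 10 mm per-frame noise and the 1 second fusion window are made-up values for illustration.

```python
import math


def frame_period_ms(fps):
    """Time between two consecutive frames, in milliseconds."""
    return 1000.0 / fps


def fused_noise_std(per_frame_std, fps, window_s):
    """Noise std after averaging all frames captured within window_s seconds.

    Assumes independent, zero-mean noise per frame (std / sqrt(N) rule).
    """
    n_frames = max(1, int(fps * window_s))
    return per_frame_std / math.sqrt(n_frames)


# Assumed example: 10 mm depth noise per frame, fused over a 1 s window.
for fps in (30, 15, 5):
    std = fused_noise_std(10.0, fps, 1.0)
    print(f"{fps:2d} fps: new frame every {frame_period_ms(fps):.0f} ms, "
          f"fused noise ~{std:.1f} mm")
```

Under these assumptions, dropping from 30 fps to 5 fps increases the time between updates from about 33 ms to 200 ms and raises the fused noise by a factor of sqrt(6), roughly 2.4.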

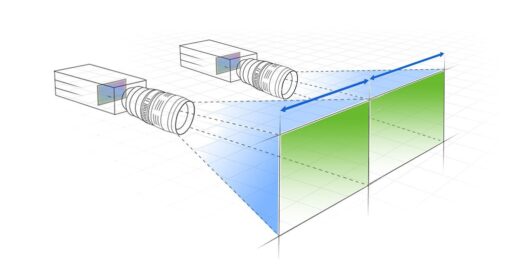

In scenarios where the fields of view of multiple cameras overlap, it's crucial to prevent crosstalk, especially when using structured light 3D cameras, whose projected patterns can interfere with each other. Active and passive stereo cameras don't have this issue. Active stereo cameras can actually benefit from crosstalk, as the additional IR dot patterns add texture to the field of view.

Multi-camera setups inherently increase the complexity of robotic applications. Scaling such systems can be challenging, as it often leads to trade-offs between performance, cost, and complexity. Decisions such as whether the cameras' fields of view should overlap, or whether to process the images with classical algorithms or neural networks, add further layers to the system design and implementation. The outcome of these design decisions is hard to predict, which increases the cost of testing and trial and error.

The ANYmal Robot from ANYbotics

We can be quite sure that the vast majority of robotic applications that use multiple cameras involve mobile robots. Just think of fleets of self-driving vehicles, warehouse robots or agricultural robots. So here are the must-haves for these applications.

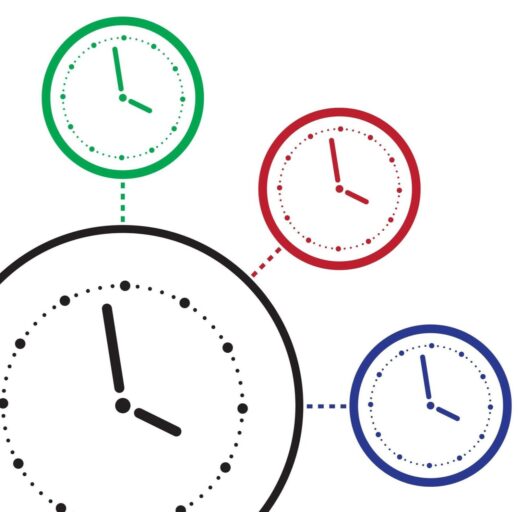

When the object in view moves, or the camera moves, some kind of hardware-based synchronization (by means of an electrical signal or pulse) is needed between the cameras. The cameras can be configured in two ways. One camera serves as the master and the others act as slaves, synchronizing their capture times to the master camera's signal. Alternatively, an external signal generator can be used, with all cameras configured as slaves to this signal, ensuring synchronized data capture.
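Once the trigger wiring is in place, it is worth verifying that the cameras really capture together. The sketch below is one simple way to do that, assuming every camera reports a hardware capture timestamp per frame on a common clock and that frame i of each camera belongs to the same trigger pulse. The function names, the timestamps and the 1 ms tolerance are hypothetical values chosen for illustration.

```python
def max_capture_spread_s(timestamps_per_camera):
    """Largest capture-time difference between cameras over all frames.

    timestamps_per_camera: one list of capture timestamps (seconds) per
    camera, all the same length, where index i is the same trigger pulse.
    """
    spreads = [max(ts) - min(ts) for ts in zip(*timestamps_per_camera)]
    return max(spreads)


def is_synchronized(timestamps_per_camera, tolerance_s=1e-3):
    """True if all cameras captured every frame within tolerance_s."""
    return max_capture_spread_s(timestamps_per_camera) <= tolerance_s


# Made-up timestamps: cam_a and cam_b share a trigger, cam_c is free-running.
cam_a = [0.0000, 0.0333, 0.0667]
cam_b = [0.0002, 0.0335, 0.0668]
cam_c = [0.0120, 0.0455, 0.0790]

print(is_synchronized([cam_a, cam_b]))  # triggered pair
print(is_synchronized([cam_a, cam_c]))  # free-running camera
```

The acceptable tolerance depends on how fast the robot or the scene moves: the distance travelled between two captures is simply the speed multiplied by the capture spread.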

Cameras equipped with a global shutter are essential in scenarios where the object or the camera is in motion. Unlike rolling shutters, which read out the image row by row, global shutters capture the entire image frame at once, eliminating the skew distortions caused by movement during readout.
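As a rough illustration of the rolling shutter effect, the sketch below estimates how far each image row is shifted when an object moves horizontally through the image at constant speed. It uses a simplified model in which rows are read out one after another with a fixed line time. The line time, image height and speed are assumed example values, not the specification of any particular sensor.

```python
def row_shifts_px(num_rows, line_time_s, image_speed_px_s):
    """Horizontal shift of each row relative to row 0, in pixels.

    Simplified rolling shutter model: row r is read out r * line_time_s
    after row 0. A global shutter corresponds to line_time_s = 0.
    """
    return [image_speed_px_s * row * line_time_s for row in range(num_rows)]


# Assumed example: 1080 rows, 18.5 microseconds per row, object moving
# at 2000 pixels per second in the image.
rolling = row_shifts_px(1080, 18.5e-6, 2000.0)
global_shutter = row_shifts_px(1080, 0.0, 2000.0)

print(f"rolling shutter skew, top to bottom: {rolling[-1]:.1f} px")
print(f"global shutter skew, top to bottom:  {global_shutter[-1]:.1f} px")
```

With these assumed numbers a vertical edge ends up slanted by roughly 40 pixels between the top and the bottom of the image, while the global shutter model shows no skew at all.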

We list here some cameras that are equipped with the essential features discussed earlier, making them ideal for various robotic applications.

There is much more to do to turn multi-camera setups into reality. We haven't yet discussed calibration techniques, stitching 2D or 3D images, how to minimize the load on the CPU in practice, and many other considerations. If you want to discuss your camera setup with us, feel free to reach out below!

Talk to us here

Our VIO outperforms what is available in common libraries and hardware implementations. We implement monocular, stereo and multi-camera VIO solutions.

Read Visual-Inertial Odometry (VIO)

We have more than a decade of experience with 2D and 3D vision frameworks in C++ and Python.

Read 2D/3D Vision and Perception