Mobile Robot Navigation

We create and/or tune 2D, 3D and 6D motion planners for robots using Open Source and custom developed software.

Posted on 08 August 2023

The rise of autonomous robots holds the promise of significantly impacting various fields like agriculture, logistics, and defense. While urban areas with well-defined roads present a structured environment for these robots, the real challenge arises when they navigate off-road terrains lacking clear markings. These natural landscapes introduce unique challenges demanding innovative solutions.

The goal is clear: enable robots to navigate off-road terrain as efficiently as they navigate structured city roads. Achieving this involves a combination of advanced sensing, real-time processing, and intelligent navigation algorithms. Mastering autonomous off-road navigation rests on three core pillars: real-time collision avoidance, real-time drivable surface detection, and a robust navigation solution that accounts for elevation and surface costs.

In this article, we will explore the challenge of outdoor driving in unmarked conditions and introduce the trio of methodologies central to addressing this challenge.

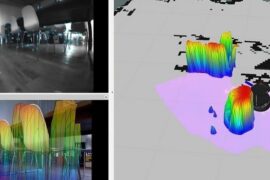

Navigating uncharted terrains demands a robust mechanism to avoid collisions, which hinges on the robot's ability to perceive and understand the world around it. This understanding is anchored in creating a real-time 3D mesh of the environment, serving as a digital twin against which the robot's own 3D model and projected navigation path can be compared. The genesis of this 3D mesh can be traced to various sources - Lidar streams, 2D camera streams, or 3D camera streams, each with its set of advantages and drawbacks.

Structure from a 3D data stream

Creating a coherent and accurate 3D mesh in real-time is a fundamental step towards ensuring safe navigation. The process of data stitching is central to this, and the method employed largely depends on the type of data stream being used. For 2D camera streams, Structure from Motion (SfM) emerges as a viable technique. SfM works by estimating the 3D structure of a scene by analyzing the motion between multiple 2D images. Over time, as more images are captured and analyzed, the 3D structure becomes increasingly accurate.
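As a rough illustration of the geometric core of SfM, the sketch below triangulates one 3D point from two views using the midpoint method. It assumes the two camera centres and the viewing rays through a matched pixel are already known; in a full SfM pipeline these come from feature matching and pose estimation, which are not shown here. The function name `triangulate_midpoint` and its arguments are hypothetical and chosen for this example only.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Return the 3D point closest to two viewing rays (midpoint method).

    c1, c2: camera centres (x, y, z) of the two views.
    d1, d2: ray directions through the matched pixel in each view.
    All inputs are assumed to be expressed in the same world frame.
    """
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    w = [a - b for a, b in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)

    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays mean no parallax, so depth cannot be recovered.
        raise ValueError("rays are parallel: depth is unobservable")

    # Ray parameters that minimise the distance between the two rays.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom

    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    # The estimate is the midpoint of the shortest segment joining the rays.
    return [(u + v) / 2.0 for u, v in zip(p1, p2)]
```

The parallel-ray check reflects why motion matters: without a baseline between the two images there is no parallax, and the point's depth cannot be estimated.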

Structure from Motion has existed for decades, and a modern alternative was made famous by Tesla in the past year: real-time Occupancy Networks. This approach uses the continuous representation of a classifier network to find the boundary between occupied and unoccupied space in 3D. The network is fed images in real time and builds up occupied volume over time, delivering in practice a 4D occupancy grid. The time dimension also makes it possible to track Occupancy Flow, which predicts the direction in which occupied volumes are moving.

On the other hand, when working with Lidar or 3D camera streams, a Truncated Signed Distance Function (TSDF) is often used for real-time data stitching. TSDF fusion merges multiple 3D scans into a single, cohesive 3D model. Each voxel stores a truncated signed distance to the nearest observed surface, and the surface of objects is recovered as the zero-crossings of that function. Because every new measurement is averaged into the stored value, TSDF handles noisy data well, a common occurrence in Lidar and 3D camera streams. The same averaging lets TSDF integrate new data into an existing mesh, which enables real-time updates. However, TSDF may require a more powerful computational backbone given the complexity and volume of data being processed.
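To make the averaging idea concrete, here is a minimal one-dimensional sketch of TSDF fusion along a single sensor ray. It is a simplification under stated assumptions: real systems such as KinectFusion work on 3D voxel grids with camera poses, whereas this example uses a row of voxels at known distances from the sensor. The names `integrate` and `zero_crossing` and the equal per-measurement weight are illustrative choices, not taken from any specific library.

```python
def integrate(tsdf, weights, voxel_centers, measured_depth, trunc):
    """Fuse one depth measurement into a 1D TSDF (running weighted average)."""
    for i, z in enumerate(voxel_centers):
        sdf = measured_depth - z          # positive in front of the surface
        if sdf < -trunc:
            continue                      # far behind the surface: occluded, skip
        d = min(1.0, sdf / trunc)         # truncate and normalise to [-1, 1]
        w = weights[i]
        tsdf[i] = (tsdf[i] * w + d) / (w + 1)
        weights[i] = w + 1


def zero_crossing(tsdf, weights, voxel_centers):
    """Return the interpolated surface position, or None if none is observed."""
    for i in range(len(tsdf) - 1):
        observed = weights[i] > 0 and weights[i + 1] > 0
        if observed and tsdf[i] > 0 >= tsdf[i + 1]:
            # Linear interpolation between the two voxels around the sign change.
            t = tsdf[i] / (tsdf[i] - tsdf[i + 1])
            return voxel_centers[i] + t * (voxel_centers[i + 1] - voxel_centers[i])
    return None
```

Feeding several noisy depth readings of the same surface into `integrate` and then calling `zero_crossing` yields a surface estimate close to the mean of the readings, which is the noise-averaging behaviour described above.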

There are various algorithms and implementations for both SfM and TSDF. For instance, VisualSFM and SfM-Net are popular in the domain of Structure from Motion. Various Occupancy Networks are also emerging; TPVFormer, for example, was inspired by the Tesla architecture. Similarly, in the realm of TSDF, Fast Fusion and KinectFusion are known for their efficiency in handling real-time data stitching.

The stitching of data into a coherent 3D mesh is a pivotal step that bridges the gap between raw sensor data and actionable spatial understanding.

3D environment meshes are an easy means of checking for collisions.

The keystone of ensuring safe navigation through unmarked terrains is a robust real-time collision checker that meticulously analyzes the intersection between the generated 3D mesh representing the environment and the robot's 3D model (the ego body).

This analysis is instrumental in evaluating a predicted navigation path for potential collisions well in advance. Unlike traditional collision detection used in physics simulations, which might calculate reactions post-collision, the focus here is solely on preemptive detection along the planned path to ensure a collision-free trajectory.

The collision checker must perform a large number of checks at frequent intervals, say every 1 cm, along a predicted path that could span dozens of meters. A 30 m path sampled every 1 cm already requires 3,000 checks per candidate path. This calls for a highly efficient, real-time collision checker that can process the data quickly and provide reliable feedback to the navigation system.

Several real-time collision checker libraries have been developed to cater to such requirements. Libraries like Bullet3, FCL (Flexible Collision Library) and PQP (Proximity Query Package) are notable in this domain. These libraries are designed to perform high-frequency collision checks efficiently, thereby allowing for a timely assessment of the predicted path against the environmental mesh.
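The path-sampling idea can be sketched without any of these libraries. The example below is a deliberately simplified stand-in, not how Bullet3, FCL, or PQP work internally: it assumes the robot is approximated by a bounding sphere and the environment by a list of obstacle points (for instance mesh vertices), and it uses a brute-force distance test instead of the bounding-volume hierarchies that real libraries rely on. The names `sample_path` and `first_collision` are hypothetical.

```python
import math


def sample_path(waypoints, step):
    """Return points spaced at most `step` apart along a polyline of waypoints."""
    samples = [tuple(waypoints[0])]
    for a, b in zip(waypoints, waypoints[1:]):
        n = max(1, math.ceil(math.dist(a, b) / step))
        for k in range(1, n + 1):
            t = k / n
            samples.append(tuple(pa + t * (pb - pa) for pa, pb in zip(a, b)))
    return samples


def first_collision(waypoints, obstacle_points, robot_radius, step=0.01):
    """Return (sample index, position) of the first colliding pose, or None.

    Assumption: the robot is a sphere of `robot_radius`; a pose collides when
    any obstacle point lies within that radius of the sampled position.
    """
    for index, p in enumerate(sample_path(waypoints, step)):
        if any(math.dist(p, q) <= robot_radius for q in obstacle_points):
            return index, p
    return None
```

Returning the first colliding sample, rather than a plain yes or no, tells the planner how far along the path it can still travel safely.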

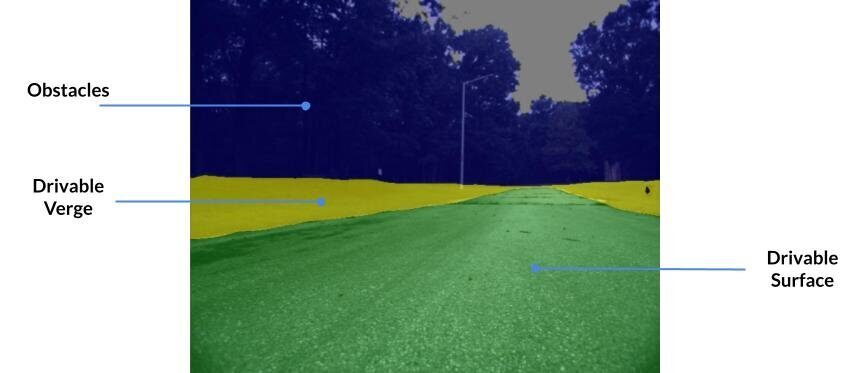

The quest for autonomous off-road navigation is significantly bolstered by the ability to differentiate drivable surfaces from non-drivable ones in real-time. The majority of existing segmentation networks, honed for urban scenarios, find themselves at a loss when road markings fade into the wilderness. This underscores the need for datasets and models tailored to off-road conditions.

Enter the RUGD (Robot Unstructured Ground Driving) and RELLIS-3D datasets, a leap toward training networks to understand off-road terrains. The RUGD dataset is centered around the semantic understanding of unstructured outdoor environments, with video sequences captured from an onboard camera on a mobile robot platform, making it a treasure trove for off-road autonomous navigation. The RELLIS-3D dataset, also collected in an off-road environment, adds a second modality: it encompasses 13,556 LiDAR scans and 6,235 images, presenting a multimodal dataset that challenges existing algorithms due to class imbalance and environmental topography.

Luckily, a great deal of Computer Vision research has focused on image segmentation, where the network determines which pixels belong together and to which class of surface or object they belong. Surface segmentation is thus a special case of image segmentation, and a classical workflow can be chosen to set up this network.

The final pillar of autonomous off-road navigation centers around intelligently planning a path across unmarked terrains. This involves synthesizing the insights obtained from real-time 3D meshing and drivable surface detection into a coherent navigation strategy. At the core of this strategy is finding the smoothest, lowest cost path in a continuously updating cost map. This intelligent path planning will guide the robot's journey through the terrain, ensuring real-time responsiveness to the evolving landscape.

A small robot finding the way to traverse a bridge.

The Hybrid A* algorithm is particularly suited for off-road navigation, extending the classical A* algorithm into a continuous domain to accommodate the kinematics of the navigating vehicle. This extension allows for smoother path planning in environments with complex topography and varying drivability.
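The step that distinguishes Hybrid A* from grid-based A* is how successor states are generated. The sketch below shows one possible node expansion, assuming a kinematic bicycle model with a fixed arc length per step; the function names, the steering set, and the discretisation resolutions are illustrative assumptions rather than values from a particular implementation.

```python
import math


def expand(state, steer_angles, step, wheelbase):
    """Generate continuous successor poses (x, y, heading) for one search node.

    Assumes a kinematic bicycle model driving forward along an arc of
    length `step` for each candidate steering angle (radians).
    """
    x, y, theta = state
    successors = []
    for steer in steer_angles:
        if abs(steer) < 1e-9:
            # Straight segment.
            nx = x + step * math.cos(theta)
            ny = y + step * math.sin(theta)
            ntheta = theta
        else:
            # Circular arc with signed turning radius.
            radius = wheelbase / math.tan(steer)
            dtheta = step / radius
            nx = x + radius * (math.sin(theta + dtheta) - math.sin(theta))
            ny = y - radius * (math.cos(theta + dtheta) - math.cos(theta))
            ntheta = (theta + dtheta) % (2.0 * math.pi)
        successors.append((nx, ny, ntheta))
    return successors


def cell_key(state, xy_res, theta_res):
    """Discretise a continuous pose so visited states can be pruned per cell."""
    x, y, theta = state
    return (
        math.floor(x / xy_res),
        math.floor(y / xy_res),
        math.floor((theta % (2.0 * math.pi)) / theta_res),
    )
```

The search keeps the continuous pose in each node, so the resulting path is drivable by the vehicle, while `cell_key` is used only to decide whether a similar pose has already been explored.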

In practice, the Hybrid A* algorithm uses cost maps from the drivable surface data obtained from segmentation networks and the elevation data from the 3D mesh. These cost maps reflect the “cost” associated with traversing through each point in the environment ("Traversability"), taking into account factors like terrain roughness, slope, and the presence of obstacles.
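One way such a cost map could be assembled is sketched below. It assumes an elevation grid (metres per cell, derived from the 3D mesh) and a boolean drivable mask (derived from the segmentation output) that share the same resolution. The cost formula, the 25 degree slope limit, and the weight are illustrative assumptions, not values from the article.

```python
import math


def slope_deg(elevation, r, c, cell_size):
    """Estimate terrain slope at a cell from finite differences of elevation."""
    rows, cols = len(elevation), len(elevation[0])
    r0, r1 = max(r - 1, 0), min(r + 1, rows - 1)
    c0, c1 = max(c - 1, 0), min(c + 1, cols - 1)
    dz_dr = (elevation[r1][c] - elevation[r0][c]) / ((r1 - r0) * cell_size) if r1 > r0 else 0.0
    dz_dc = (elevation[r][c1] - elevation[r][c0]) / ((c1 - c0) * cell_size) if c1 > c0 else 0.0
    return math.degrees(math.atan(math.hypot(dz_dr, dz_dc)))


def traversability_cost(elevation, drivable, cell_size,
                        max_slope_deg=25.0, slope_weight=5.0):
    """Combine slope and drivability into a per-cell cost (inf = impassable)."""
    cost = []
    for r, row in enumerate(elevation):
        cost_row = []
        for c, _ in enumerate(row):
            slope = slope_deg(elevation, r, c, cell_size)
            if not drivable[r][c] or slope > max_slope_deg:
                cost_row.append(math.inf)   # obstacle or too steep
            else:
                # Flat drivable ground costs 1; cost grows linearly with slope.
                cost_row.append(1.0 + slope_weight * slope / max_slope_deg)
        cost.append(cost_row)
    return cost
```

Keeping the minimum cost at 1 for flat, drivable ground means a distance-based heuristic remains a lower bound on the true path cost, which is convenient for A*-style planners.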

The algorithm continuously searches a graph of candidate motions for the lowest-cost path, using heuristics to avoid expanding branches that are unlikely to lead to the goal. The traversability (the cost per cell) must be provided as an input.
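The search principle can be illustrated with plain grid A*, the discrete ancestor of Hybrid A*. This sketch is not Hybrid A* itself: it ignores vehicle kinematics and moves between 4-connected cells. It assumes a cost grid where each finite cell cost is at least 1 and impassable cells are marked with infinity, so that the Manhattan distance is an admissible heuristic. The function name `astar` is chosen for this example.

```python
import heapq
import math


def astar(cost, start, goal):
    """Lowest-cost 4-connected path on a cost grid; returns (path, total cost).

    Assumes every finite cell cost is >= 1 so that the Manhattan distance
    never overestimates the remaining cost. Entering a cell pays its cost.
    """
    rows, cols = len(cost), len(cost[0])

    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0.0, start)]
    best = {start: 0.0}
    parent = {}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1], g
        if g > best.get(cell, math.inf):
            continue  # stale queue entry, a cheaper route was found already
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and math.isfinite(cost[nr][nc]):
                ng = g + cost[nr][nc]
                if ng < best.get(nxt, math.inf):
                    best[nxt] = ng
                    parent[nxt] = cell
                    heapq.heappush(open_heap, (ng + heuristic(nxt), ng, nxt))
    return None, math.inf
```

The heuristic orders the queue so that cells closer to the goal are expanded first, which is what keeps the search from exploring the whole map.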

When the real-time collision checker signals a potential collision along the planned path, the Hybrid A* algorithm can swiftly replan the route, even bringing the robot to a full stop if necessary before devising a new path to circumvent the detected obstacle.

The Hybrid A* algorithm's flexibility has led to numerous implementations and papers focusing on enhancing its efficacy for off-road navigation. Various versions, like the Roadmap Hybrid A* and Traversability Hybrid A*, have been proposed to tackle specific challenges associated with off-road navigation, demonstrating the algorithm's adaptability to different navigation scenarios and vehicle kinematics.

Apart from Hybrid A*, D* Lite and RRT* (Rapidly-exploring Random Trees Star) are other algorithms known for their efficacy in off-road autonomous navigation. D* Lite is noted for its efficiency in dynamic environments, while RRT* is adept at exploring and planning paths in high-dimensional spaces. Both algorithms can also be tailored to incorporate real-time drivable surface and elevation data, forming a cohesive navigation solution apt for off-road conditions.

The journey through the details of autonomous off-road navigation reveals a dynamic field, constantly advancing through innovations in both software algorithms and hardware technologies.

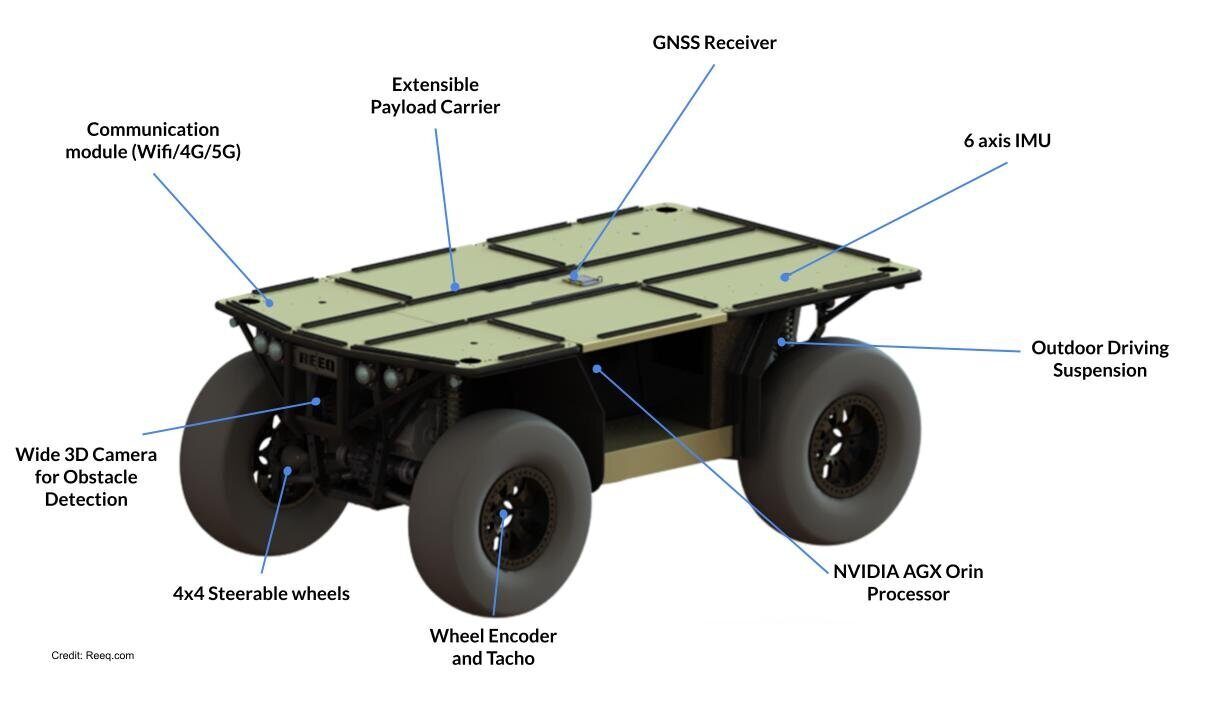

A significant catalyst in this progress is the advent of cost-effective, high-performance computing platforms. These platforms have lowered the cost of entry, enabling a broader spectrum of research and commercial initiatives. This affordability accelerates the iterative design process, allowing researchers and companies to quickly refine their Unmanned Ground Vehicle designs to better navigate off-road terrains.

Additionally, advancements in sensor technologies like Lidar, cameras, and 3D cameras have enriched the data available for real-time decision-making. These advancements, coupled with the proliferation of open-source software libraries dedicated to autonomous navigation, have fostered a collaborative environment pushing the envelope towards more reliable off-road autonomous navigation solutions.
