Addressing Safety Concerns - Collisions

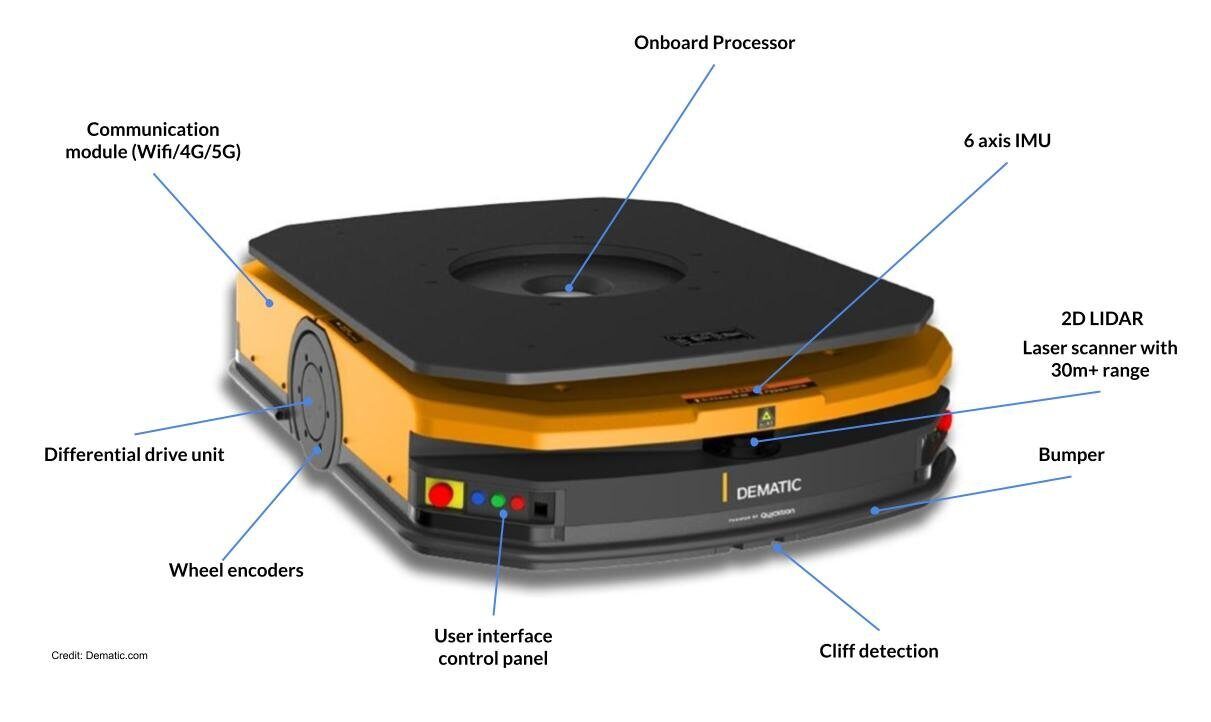

One of the most important considerations when building a mobile robot is safety, particularly collision avoidance. For indoor mobile robots, you will want sensors with a sufficiently long range and a wide field of view. The three sensors most commonly used to avoid collisions are LIDAR, 3D cameras and sonar. LIDAR is the most common choice on mid-size to large robots, 3D cameras on small to mid-size robots, and sonar mainly serves to detect glass walls when the environment has them.
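To make "sufficiently long range and wide field of view" a little more concrete, a common back-of-the-envelope check is to compare the sensor's range with the robot's stopping distance: the distance covered during the reaction time (v · t) plus the braking distance at constant deceleration (v² / 2a). The sketch below assumes constant deceleration and a single fixed reaction time. It also computes how wide a strip a sensor with a given horizontal field of view covers at a given distance. The function names, parameters and example numbers are hypothetical and do not come from any sensor datasheet or safety standard.

```python
import math


def required_sensing_range(speed_mps: float, decel_mps2: float,
                           reaction_time_s: float, margin_m: float = 0.0) -> float:
    """Minimum forward range needed to stop before a detected obstacle.

    Assumes (hypothetically) a fixed reaction time and constant deceleration:
    reaction distance v * t, plus braking distance v^2 / (2 * a), plus a margin.
    """
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    reaction_distance = speed_mps * reaction_time_s
    braking_distance = speed_mps ** 2 / (2.0 * decel_mps2)
    return reaction_distance + braking_distance + margin_m


def coverage_width(distance_m: float, hfov_deg: float) -> float:
    """Width of the strip a sensor sees at a given distance for a horizontal FoV."""
    half_angle = math.radians(hfov_deg) / 2.0
    return 2.0 * distance_m * math.tan(half_angle)


if __name__ == "__main__":
    # Hypothetical indoor robot: 1.5 m/s top speed, 1.0 m/s^2 braking,
    # 0.2 s total reaction time, 0.1 m extra safety margin.
    rng = required_sensing_range(1.5, 1.0, 0.2, margin_m=0.1)
    width = coverage_width(rng, hfov_deg=90.0)
    print(f"required range: {rng:.3f} m, covered width at that range: {width:.3f} m")
```

If the covered width at the required range is narrower than the robot's own footprint (plus its turning envelope), obstacles can approach from outside the sensor's view, which is one reason a wide field of view matters as much as raw range.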

There is currently a fight going on between LIDAR and 3D cameras. The LIDAR side is led by traditional industrial sensor makers such as SICK and Hokuyo, along with many smaller competitors, while the 3D camera side is led by Intel with its RealSense product line. 3D camera vendors aim to dethrone LIDAR on robots of all sizes, arguing that LIDAR's extra range is not necessary for any indoor application.