Robot/Camera Calibration

Extrinsic and Intrinsic Camera Calibration. We use the right camera model, calibration pattern and algorithms for your specific use case.

Read Robot/Camera Calibration

Posted on 04 October 2023

For every mobile robot, the integration of reliable sensors with an efficient computing platform is crucial for achieving modern-day capabilities. The ZED X stereo camera, released by Stereolabs in 2023 and specifically designed for compatibility with NVIDIA Jetson, offers a compelling solution for both indoor and outdoor robotic applications. Building on our previous explorations of NVIDIA Isaac ROS, this guide aims to provide a thorough understanding of how the ZED X camera can be effectively utilized in various scenarios.

The ZED X and ZED X mini stereo camera lineup for mobile robotics applications.

In the early days of 3D vision, Stereolabs launched its ZED 3D camera in 2015, around the same time Intel's RealSense cameras were becoming popular. Initially, ZED cameras worked with Intel systems, which Intel hoped to dominate with its third-generation RealSense D400 series (released in January 2018). However, Intel decided not to support its RealSense cameras on ARM/Jetson platforms, creating an opening for Stereolabs.

Intel’s move to focus exclusively on its x86 architecture made sense strategically, as it strengthened their position in systems that already relied on Intel processors. But this left a gap in the market for ARM/Jetson compatibility, which Stereolabs quickly recognized and filled. By ensuring the ZED camera worked seamlessly with NVIDIA’s ARM-based Jetson platform, Stereolabs provided an alternative for users who needed flexibility beyond Intel’s ecosystem. This decision allowed Stereolabs to turn Intel’s limitations into an opportunity, carving out a unique place in the industry.

Stereolabs’ focus on ARM compatibility also aligned perfectly with NVIDIA’s expanding vision. NVIDIA was evolving from its traditional role in GPUs to driving advancements in robotics with its Isaac SDK and Jetson hardware. Their commitment to combining 2D/3D vision with GPU-accelerated image processing created a space that Intel had not yet explored.

This shared emphasis on innovation and adaptability made NVIDIA and Stereolabs natural partners. By the time Stereolabs launched the ZED X camera, they had fully embraced this partnership, dropping support for Intel platforms entirely.

Oh irony!

The exclusive ZED X-NVIDIA combo is a bet on a few high-profile applications in robotics. We highlight here the three best-supported applications for the ZED X.

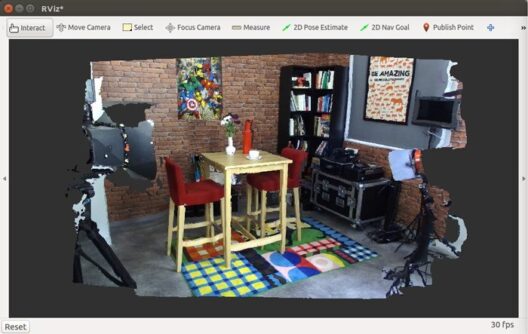

One of the principal features of the ZED X camera is its ability to produce depth maps, even in indoor lighting conditions. Coupled with NVIDIA Isaac ROS, this becomes a useful tool for indoor navigation. Popular applications are mapping complex environments, navigating, avoiding obstacles, and recognizing specific objects or areas of interest. The Isaac ROS SDK officially supports the ZED camera lineup for these indoor applications.

The rugged design of the ZED X camera, complete with its IP66-rated enclosure, makes it suitable for outdoor applications, for example agricultural drones capturing real-time data on crop health, or automated mining and construction robots. NVIDIA does not offer many tools in its Isaac SDK for outdoor applications, so you'll need to build on the ZED SDK instead, which allows for GNSS integration, object detection and recognition (more on that below) and 3D mesh building.

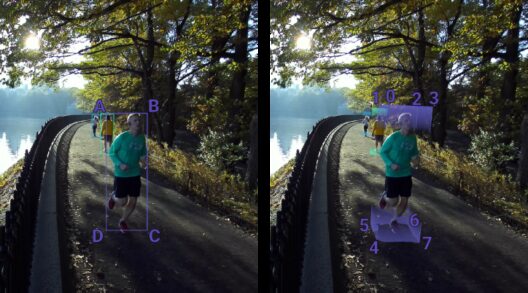

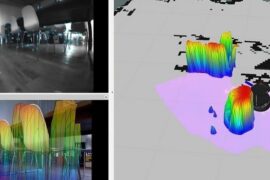

The ZED X camera comes with an SDK that includes a 3D object detection pipeline. It uses the NVIDIA Jetson GPU to run neural networks for real-time object detection and recognition. It offers built-in detectors for persons, vehicles, bags, animals, electronics and fruit. If these do not meet your needs, you can create a Custom Detector using the NVIDIA TensorRT library.
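Downstream of such a detector, robot code typically filters the results by class and confidence before acting on them. The sketch below illustrates that step only. The `Detection` record, its 0 to 100 confidence scale and the `select_detections` helper are hypothetical names for illustration, not the ZED SDK's own object structures.

```python
import math
from dataclasses import dataclass
from typing import Tuple


# Hypothetical record type for illustration; the ZED SDK exposes its own
# object structures, which are not reproduced here.
@dataclass(frozen=True)
class Detection:
    label: str                               # e.g. "person", "vehicle"
    confidence: float                        # assumed scale: 0..100
    position_m: Tuple[float, float, float]   # x, y, z in the camera frame, metres


def distance_m(detection):
    """Euclidean distance from the camera origin to the detected object."""
    return math.hypot(*detection.position_m)


def select_detections(detections, wanted_labels, min_confidence):
    """Keep detections of the wanted classes at or above the confidence
    threshold, sorted nearest first."""
    kept = [
        d for d in detections
        if d.label in wanted_labels and d.confidence >= min_confidence
    ]
    return sorted(kept, key=distance_m)
```

A navigation stack could, for instance, call `select_detections(frame_detections, {"person", "vehicle"}, 50.0)` on every frame and react only to the nearest result.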

As intriguing as these applications are, you might be wondering, "How do I actually get started?" The next chapter will delve into the essential building blocks you'll need to bring these applications to life. From setting up the development environment to choosing the right SDKs and hardware, we'll guide you through the foundational steps to ensure a successful project.

Embarking on a project that leverages the ZED X camera and NVIDIA Jetson platforms requires a well-thought-out architecture. In this chapter, we'll outline the key components you'll need to set up your development environment and target hardware and software runtime.

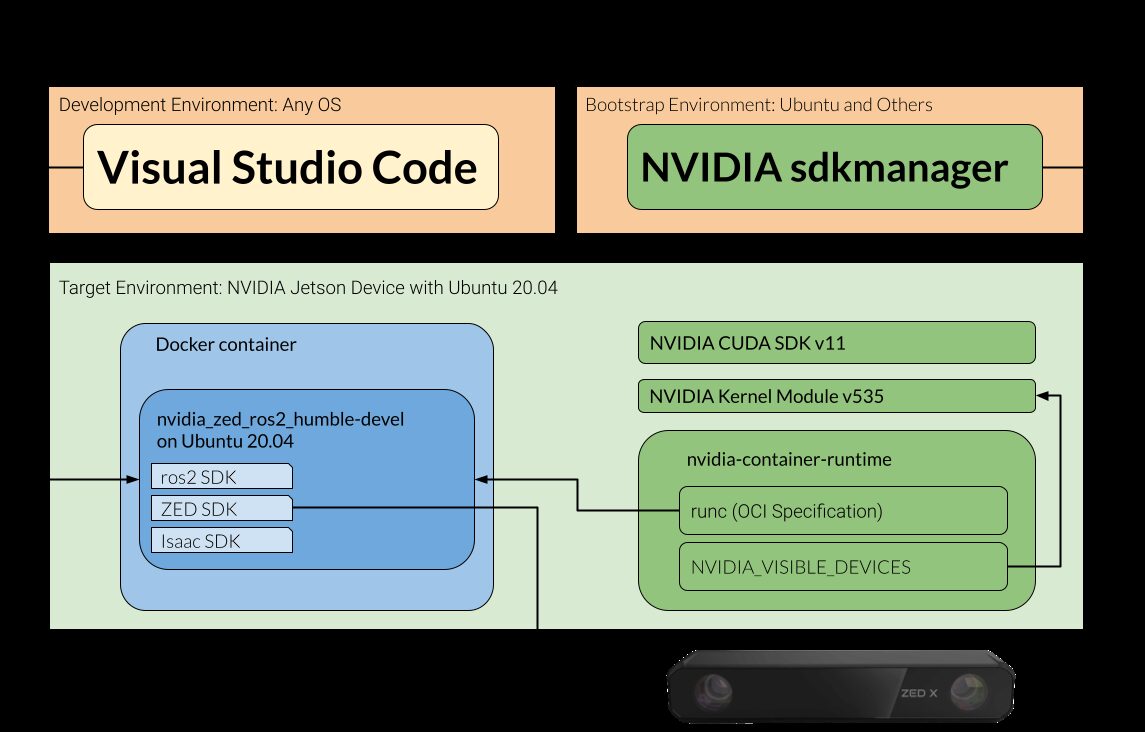

This diagram outlines the software and components of a typical setup. You can develop code from any operating system, using a remote connection to a target (any Jetson device). You do, however, need to set up this target device with the NVIDIA SDK Manager. Most developers nowadays run Docker containers on the Jetson target to keep the complex dependencies identical across all their setups.

A Typical ZED X + NVIDIA setup.

Here’s the breakdown:

ZED SDK: The ZED SDK v4 is your primary tool for interacting with the ZED X camera. It provides APIs for capturing images, generating depth maps, and managing the camera devices.

ROS Humble: We recommend sticking to ROS 2 and its Humble release: it is the best-supported release on NVIDIA hardware and a requirement if you want to add Isaac ROS later on. The Isaac SDK itself is not required for this setup.

Docker: You will run Docker containers both on your target hardware and development environment, even if these are already running the Ubuntu 20.04 Operating System.

NVIDIA SDK Manager: Only required to bootstrap your Jetson device (it will install the correct OS and libraries). Once the bootstrapping is done, you don’t need the SDK manager anymore and any developer can use the Docker containers instead, on any supported Docker OS.
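As a rough illustration of the Docker step in this breakdown, the sketch below assembles a `docker run` command line for a GPU-enabled container on a Jetson. The image name `my-registry/zedx-humble:dev`, the workspace paths and the helper name are placeholders, and the chosen flags (`--privileged`, host networking) are one permissive starting point rather than a recommended production configuration.

```python
import shlex


def jetson_docker_run(image, workdir_host, workdir_container="/workspace"):
    """Build a `docker run` argument list for a GPU-enabled container."""
    return [
        "docker", "run", "--rm", "-it",
        "--runtime", "nvidia",   # NVIDIA container runtime, exposes the GPU
        "--privileged",          # broad device access; tighten for production
        "--network", "host",     # simplest option for ROS 2 discovery
        "-v", f"{workdir_host}:{workdir_container}",  # mount the workspace
        "-w", workdir_container,                      # start inside it
        image,
    ]


# Placeholder image name and path, for illustration only.
cmd = jetson_docker_run("my-registry/zedx-humble:dev", "/home/user/ros2_ws")
print(shlex.join(cmd))
```

Keeping the command in a small script like this makes it easy to use the exact same invocation on the development machine and on the target.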

We recommend getting started with the NVIDIA Jetson AGX Orin 64GB Developer Kit, together with the mandatory GMSL2 Capture Card, which can run at most 2 ZED X devices (since each ZED X device has 2 cameras, the maximum is 4 cameras). The kit offers 64GB of RAM, 12 CPU cores, 275 TOPS of AI performance and 2048 CUDA cores. An advantage is that it can also serve as your build environment: it is powerful enough to compile applications, which avoids setting up a cross-compilation environment on your regular Linux workstation.

The NVIDIA Jetson AGX Orin Developer Kit

Once you are familiar with the setup and have your first applications running, you can start field trials on the ZED Box Orin NX 16GB, which also includes a GMSL2 port. It is a bit cheaper and lighter than the Developer Kit and offers 16GB of RAM, an 8-core CPU, 100 TOPS of AI performance, 1024 CUDA cores and 32 Tensor cores. Since NVIDIA takes care of the bootstrapping, you can also opt for (cheaper) non-ZED field devices.

The ZED Box Orin NX 16GB
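To put the two targets side by side, the short sketch below encodes the figures quoted in this section and computes a few ratios, along with the camera count behind the capture card limit. The class and function names are illustrative; the numbers are the ones stated above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JetsonTarget:
    name: str
    ram_gb: int
    cpu_cores: int
    ai_tops: int
    cuda_cores: int


# Figures as quoted in this article.
AGX_ORIN = JetsonTarget("Jetson AGX Orin 64GB Developer Kit", 64, 12, 275, 2048)
ZED_BOX = JetsonTarget("ZED Box Orin NX 16GB", 16, 8, 100, 1024)

CAMERAS_PER_ZED_X = 2  # one stereo device carries two cameras


def max_cameras(zed_devices):
    """Number of individual cameras for a given number of ZED X devices."""
    return zed_devices * CAMERAS_PER_ZED_X


def ratio(a, b, field):
    """How many times larger `field` is on target `a` than on target `b`."""
    return getattr(a, field) / getattr(b, field)


for field in ("ram_gb", "cpu_cores", "ai_tops", "cuda_cores"):
    print(f"{field}: {ratio(AGX_ORIN, ZED_BOX, field):.2f}x")
print("cameras on a 2-device capture card:", max_cameras(2))
```

The Developer Kit has four times the RAM and 2.75 times the quoted AI performance of the ZED Box, which is worth keeping in mind when an application tuned on the kit moves to the field device.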

The ZED X camera offers a variety of integration options that cater to different development needs. In this chapter, we'll delve into some of the most popular frameworks in the mobile robotics community: ZED ROS2, ZED PCL, ZED Docker, and ZED OpenCV.

The ZED ROS2 integration allows you to use ZED stereo cameras with ROS2, providing access to a wide range of data including rectified/unrectified images, depth data, colored 3D point clouds, position and mapping with GNSS data fusion. Since we recommend using ROS 2 Humble, take care not to use the Docker containers shipped with the ZED ROS 2 wrapper; build instead on top of ROS Humble in an Ubuntu 20.04 Docker container.

The ZED ROS2 integration offers a comprehensive solution for those looking to leverage the capabilities of ZED cameras in a ROS2 environment.
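As a starting point, the wrapper is usually brought up with a single `ros2 launch` command. The sketch below builds that command, assuming the `zed_wrapper` package with its `zed_camera.launch.py` launch file and `camera_model` argument as found in recent releases of the wrapper; check the README of the version you build, since launch file names have changed between releases. The helper name is illustrative.

```python
import shlex


def zed_launch_command(camera_model="zedx", **launch_args):
    """Build the `ros2 launch` command line for the ZED ROS 2 wrapper.

    Assumes the `zed_wrapper` package and `zed_camera.launch.py` launch file;
    extra keyword arguments are passed through as `name:=value` launch arguments.
    """
    cmd = [
        "ros2", "launch", "zed_wrapper", "zed_camera.launch.py",
        f"camera_model:={camera_model}",
    ]
    cmd += [f"{key}:={value}" for key, value in sorted(launch_args.items())]
    return cmd


print(shlex.join(zed_launch_command()))
print(shlex.join(zed_launch_command("zedxm", camera_name="front")))
```

Running the printed command inside the Humble container should start the camera node and publish its image, depth and point cloud topics.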

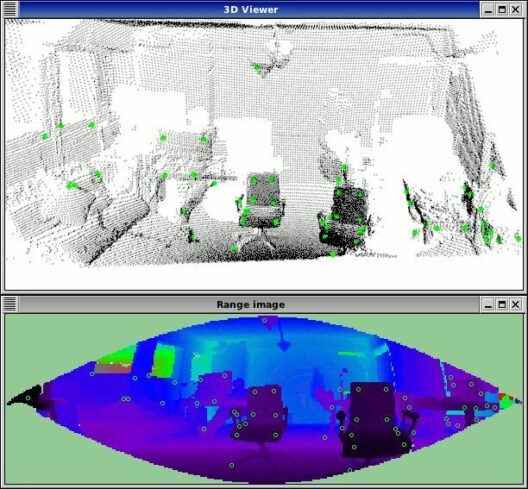

The ZED PCL integration serves as an example program that demonstrates how to acquire and display a 3D point cloud using the Point Cloud Library (PCL). Unlike many PCL applications that rely on ROS for data acquisition and processing, this example specifically shows how to achieve this without using ROS.

This ZED PCL integration offers an easy approach to 3D point cloud acquisition and display, especially for those who prefer not to use ROS.
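To make the relation between a depth map and a point cloud concrete, here is a minimal pure-Python sketch of pinhole back-projection. It is not the ZED SDK or PCL code path (the SDK computes the point cloud for you); the function names, the image-style axis convention and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions for illustration.

```python
import math


def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth Z in metres to a 3D point in the
    camera frame (assumed convention: x right, y down, z forward)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_to_points(depth, fx, fy, cx, cy):
    """Turn a depth image (list of rows) into a list of 3D points, skipping
    invalid measurements (NaN, infinite or non-positive depth)."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if math.isfinite(z) and z > 0.0:
                points.append(backproject(u, v, z, fx, fy, cx, cy))
    return points
```

In a real application the same computation would run vectorized on the GPU or with an array library; the loop form is kept here only for readability.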

The ZED Docker integration provides a virtualized environment for running the ZED SDK, isolating it from the host machine. This is particularly useful for developers who need to manage lots of dependencies and want to start from a tried-and-tested target environment.

For the NVIDIA Jetson workflow, both the Development and the Target run Docker containers, even if the host OS is a supported Ubuntu version. The dependencies are too intricate and Docker guarantees a reproducible development and runtime environment. Both NVIDIA and Stereolabs offer Docker images in order to offer stable environments.

The ZED OpenCV integration serves as an example program that demonstrates how to capture images and depth maps from the ZED camera and convert them into OpenCV-compatible formats. This is particularly useful for developers who are already familiar with OpenCV and want to leverage its capabilities for image and depth map processing.

This ZED OpenCV integration offers a straightforward way to integrate ZED cameras with OpenCV, especially for those who prefer not to use ROS.
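A common step when handing depth maps to an image-processing library is converting metric depth into an 8-bit grayscale image for display. The sketch below shows that conversion in plain Python, assuming depth values in metres with NaN or infinite values marking invalid pixels; the function name and the near-is-bright mapping are illustrative choices, not the sample's actual code.

```python
import math


def depth_to_gray8(depth, min_m, max_m):
    """Map depths in [min_m, max_m] metres to 255..0 (near is bright).

    Values outside the range are clamped; invalid pixels (NaN or infinite)
    become 0, the same value as the far limit.
    """
    span = max_m - min_m
    out = []
    for row in depth:
        out_row = []
        for z in row:
            if not math.isfinite(z):
                out_row.append(0)
                continue
            clamped = min(max(z, min_m), max_m)
            out_row.append(round(255 * (max_m - clamped) / span))
        out.append(out_row)
    return out
```

The resulting rows of integers in the 0 to 255 range can be wrapped in an 8-bit single-channel image by whatever array type your imaging library expects.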

With this, we've covered the popular framework integrations for the ZED X camera. Each has its own set of capabilities and limitations, making it crucial to choose the one that aligns best with your project's specific needs.

Hopefully this guide has made you a bit wiser about the ins and outs of using the ZED X on an NVIDIA target. Intermodalics offers professional support on this and other integrations, so don’t hesitate to reach out!

Order a Robot Vision capability

Our VIO outperforms what is available in common libraries and hardware implementations. We implement monocular, stereo and multi-camera VIO solutions

Read Visual-Inertial Odometry (VIO)

We create global and local planners that take into account the collision of the robot body with the environment.

Read 2D/3D Collision Avoidance