NVIDIA Isaac ROS

We integrate the NVIDIA Isaac SDK into ROS 2 applications using the officially supported Isaac ROS stack.

Posted on 05 January 2024

At ROSCon 2023, NVIDIA announced the newest Isaac ROS software release, Isaac ROS 2.0. With over two years of dedicated development in the rearview mirror, NVIDIA continues to propel the acceleration of robotics and AI applications on the NVIDIA Jetson platform.

NVIDIA Isaac ROS is a powerful and versatile robotics platform that enables developers to quickly create, deploy, and test robotics applications. It is the result of NVIDIA’s work with the open-source Robot Operating System (ROS) 2 Humble release. Isaac ROS is designed to make it easier for developers to create and simulate robots that can interact with their environment, recognize objects, and navigate. NVIDIA is also investing heavily in porting robotic algorithms to the GPU, allowing for real-time, on-robot data processing and control.

In this article, we’ll give you an overview of Isaac ROS, exploring its features and benefits, and provide a summary of the platform in under 5 minutes!

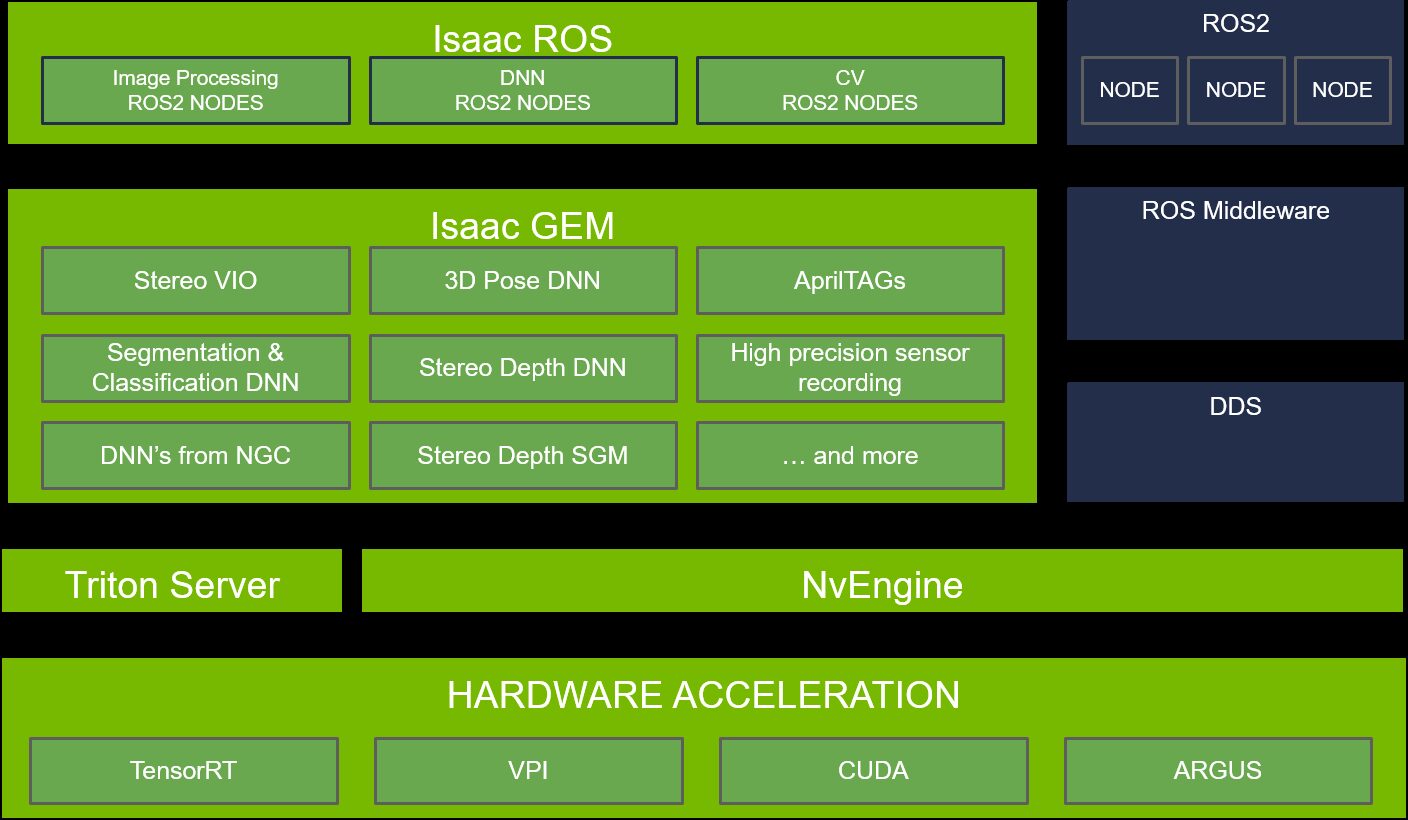

Isaac ROS consists of traditional ROS 2 nodes and Isaac-specific, GPU-accelerated packages (GEMs) for hardware-accelerated performance. It fully replaces the older NVIDIA Isaac SDK releases.

Isaac ROS is:

Now let’s dive into the most important parts of this software stack.

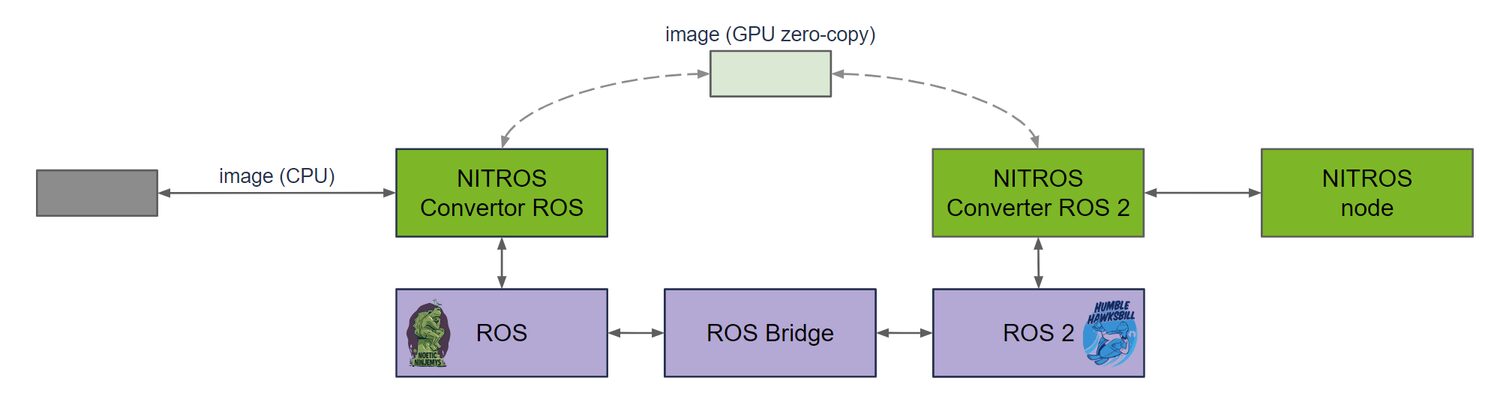

ROS 2 Humble introduces new hardware-acceleration features, type adaptation and type negotiation, that significantly increase performance for developers who want to add AI/machine learning and computer vision functionality to their ROS-based applications. Together they make zero-copy data exchange on the hardware accelerator possible, as long as the nodes live in a single process, similar to ROS 1 nodelets. This eliminates CPU copy overhead and uses the full potential of the underlying GPU. NVIDIA’s implementation of type adaptation and type negotiation is called NITROS (NVIDIA Isaac Transport for ROS). NVIDIA lists these assumptions:

In the 2.0 release, NVIDIA introduced an Isaac ROS NITROS bridge, which lets applications that use the original ROS bridge between ROS 1 and ROS 2 nodes benefit from GPU zero-copy as well.
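To make the idea of type negotiation more tangible, here is a deliberately simplified sketch in plain Python. It is not the ROS 2 or NITROS API: the function `negotiate_type`, the format names `gpu_image` and `cpu_image`, and the weighting rule are all assumptions made for illustration. Each endpoint advertises the formats it supports together with a preference weight, and the format that every endpoint supports with the highest combined weight wins.

```python
from typing import Dict, Iterable, Optional


def negotiate_type(
    publisher_weights: Dict[str, float],
    subscriber_weights: Iterable[Dict[str, float]],
) -> Optional[str]:
    """Pick the format supported by all endpoints with the highest total weight.

    Hypothetical helper: the real negotiation logic in ROS 2 is more involved.
    """
    subscribers = list(subscriber_weights)

    # Only formats that the publisher and every subscriber support qualify.
    common = set(publisher_weights)
    for sub in subscribers:
        common &= set(sub)
    if not common:
        return None  # no shared format at all

    def total(fmt: str) -> float:
        return publisher_weights[fmt] + sum(sub[fmt] for sub in subscribers)

    # Sort the names first so that ties are broken deterministically.
    return max(sorted(common), key=total)


publisher = {"gpu_image": 1.0, "cpu_image": 0.2}
gpu_subscriber = {"gpu_image": 1.0, "cpu_image": 0.1}
cpu_only_subscriber = {"cpu_image": 1.0}

print(negotiate_type(publisher, [gpu_subscriber]))                       # gpu_image
print(negotiate_type(publisher, [gpu_subscriber, cpu_only_subscriber]))  # cpu_image
```

The second call shows why a pipeline can silently lose its zero-copy benefit: a single endpoint that only understands the CPU format pulls the whole topic back to that format.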

This ROS 2 package performs visual simultaneous localization and mapping (VSLAM) and estimates visual-inertial odometry (VIO) using the GPU-accelerated Isaac cuVSLAM library. It takes a pair of stereo images and the camera parameters as input and publishes the current position of the camera relative to its start position. cuVSLAM is not the best performer on the KITTI benchmark, but it gives a good, GPU-accelerated starting point for exploratory purposes.

You may learn more about VSLAM on our VSLAM and Navigation page or visit our in-depth review of the cuVSLAM ROS package.

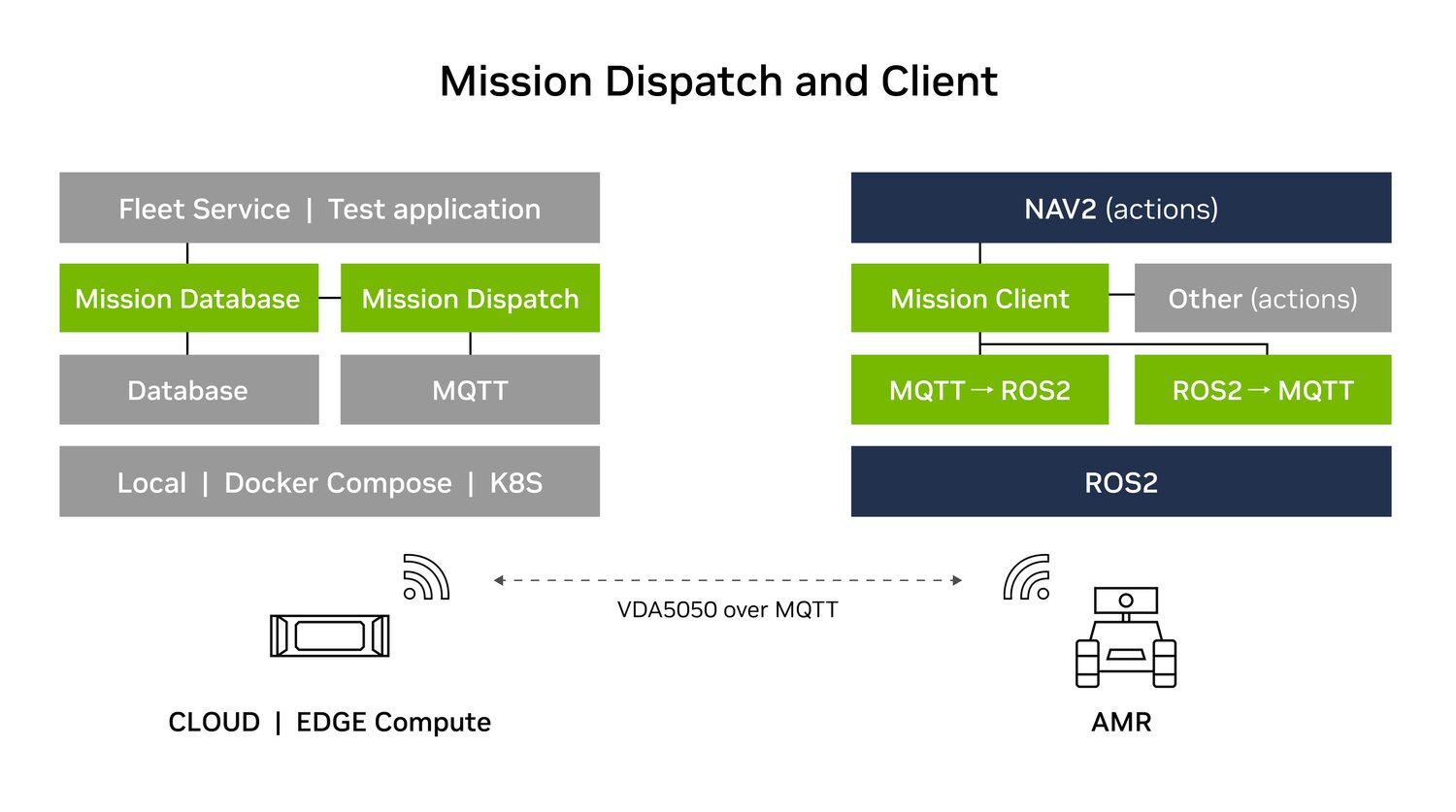

This open-source, CPU-only package allows you to assign tasks from a fleet management system, which uses MQTT, to the robot, which uses ROS 2, and to monitor them. Mission Dispatch is a cloud-native microservice that can be integrated as part of larger fleet management systems. More specifically, it is an implementation of the VDA5050 standard for AGVs and mobile robots.
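As a rough illustration of what travels over MQTT in a VDA5050 setup, the sketch below builds an order message and its topic name using only the Python standard library. The field names and the topic layout follow our reading of the VDA5050 specification and should be checked against the version you target. The helpers `build_order` and `order_topic`, and the values `acme`, `robot-001` and `warehouse`, are hypothetical. This is not the Mission Dispatch API, and no MQTT client is involved.

```python
import json
from datetime import datetime, timezone


def build_order(order_id, waypoints, manufacturer="acme",
                serial_number="robot-001", map_id="warehouse"):
    """Build a VDA5050-style order that visits the waypoints in sequence."""
    nodes, edges = [], []
    for i, (x, y) in enumerate(waypoints):
        # Nodes get even sequence ids, the edges between them odd ones.
        nodes.append({
            "nodeId": f"n{i}",
            "sequenceId": 2 * i,
            "released": True,
            "nodePosition": {"x": x, "y": y, "mapId": map_id},
            "actions": [],
        })
        if i > 0:
            edges.append({
                "edgeId": f"e{i - 1}",
                "sequenceId": 2 * i - 1,
                "released": True,
                "startNodeId": f"n{i - 1}",
                "endNodeId": f"n{i}",
                "actions": [],
            })
    return {
        "headerId": 1,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "version": "2.0.0",
        "manufacturer": manufacturer,
        "serialNumber": serial_number,
        "orderId": order_id,
        "orderUpdateId": 0,
        "nodes": nodes,
        "edges": edges,
    }


def order_topic(manufacturer, serial_number, interface="uagv", major="v2"):
    """Topic the fleet manager would publish the order on (assumed layout)."""
    return f"{interface}/{major}/{manufacturer}/{serial_number}/order"


order = build_order("order-42", [(0.0, 0.0), (2.5, 0.0), (2.5, 4.0)])
payload = json.dumps(order)
print(order_topic("acme", "robot-001"))
print(len(order["nodes"]), "nodes,", len(order["edges"]), "edges")
```

In a real deployment, the payload would be handed to an MQTT client on the fleet management side, and the robot would report progress back on its own state topic.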

Isaac ROS contains six built-in Deep Neural Network (DNN) inference models:

We highlight three repositories that we expect to be used in many robot setups:

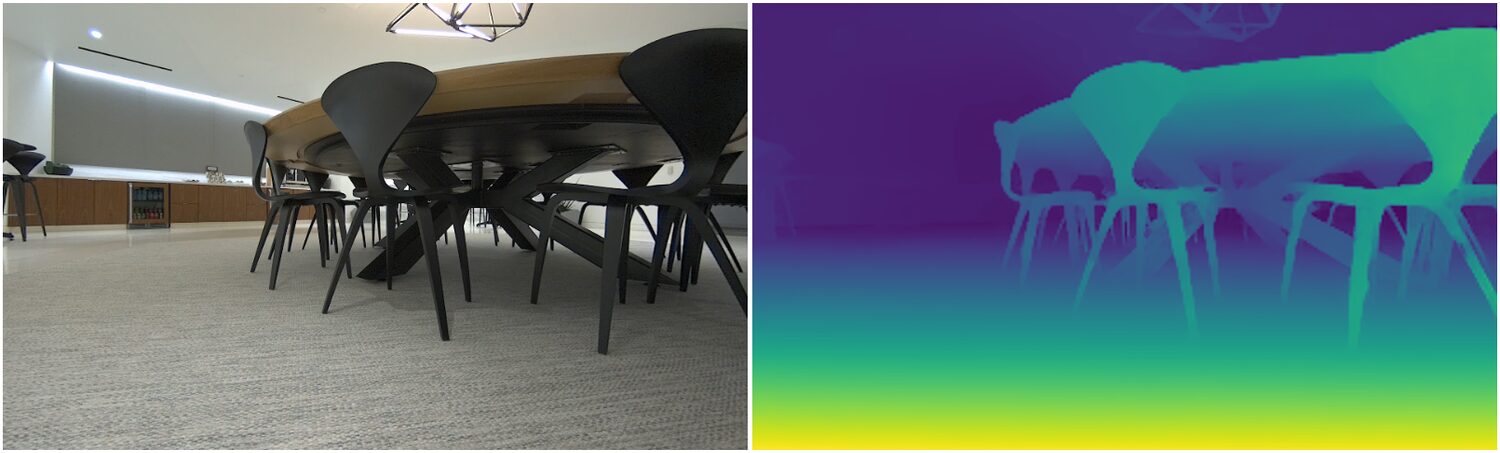

There is a race going on between stereo camera providers to create the best depth images in any given environment. NVIDIA has contributed by providing the ESS Stereo Disparity Estimation DNN, and the model received a major update in the Isaac ROS 2.0 release. It takes a stereo image pair (a left and a right image), each normalized with mean 0.5 and standard deviation 0.5 and each 576 by 960 pixels in size. The output is a disparity map, from which depth can be derived, and a confidence map, both at the same resolution. There is also a Light ESS network that works at half the resolution in each dimension (so a quarter of the pixels).
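The input normalization and the resolution arithmetic can be sketched in a few lines of plain Python. We assume here that 8-bit pixel values are first scaled to the range 0 to 1 before the mean and standard deviation are applied. That scaling step, and the helper names `normalize_pixel` and `normalize_image`, are our assumptions and not taken from NVIDIA's documentation.

```python
ESS_HEIGHT, ESS_WIDTH = 576, 960  # input size quoted in this article


def normalize_pixel(value_8bit, mean=0.5, std=0.5):
    """Scale an 8-bit intensity to [0, 1], then normalize with mean and std."""
    scaled = value_8bit / 255.0
    return (scaled - mean) / std


def normalize_image(image):
    """Normalize a 2D list of 8-bit intensities, row by row."""
    return [[normalize_pixel(v) for v in row] for row in image]


# With mean 0.5 and std 0.5 the full 8-bit range maps onto [-1, 1].
print(normalize_pixel(0), normalize_pixel(255))  # -1.0 1.0

# Light ESS: half the resolution per dimension means a quarter of the pixels.
light_height, light_width = ESS_HEIGHT // 2, ESS_WIDTH // 2
full_pixels = ESS_HEIGHT * ESS_WIDTH
light_pixels = light_height * light_width
print(light_height, light_width, full_pixels // light_pixels)  # 288 480 4
```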

The ESS model was trained on 600,000 synthetically generated stereo images in rendered scenes from Omniverse, as well as about 25,000 real sensor frames collected using HAWK stereo cameras.

NVIDIA publishes benchmarks of this model, reporting the frame rate (ranging from 28 FPS on AGX Xavier to 85 FPS on AGX Orin) and the percentage of bad pixels (i.e. bp2% and bp4%) in the disparity map, meaning pixels that deviate from the ground-truth disparity map.
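A bad-pixel metric of this kind can be computed as follows. We assume the common convention that bpN is the percentage of pixels with valid ground truth whose absolute disparity error exceeds N pixels. NVIDIA's exact evaluation protocol may differ, and the function name `bad_pixel_percentage` is ours.

```python
def bad_pixel_percentage(predicted, ground_truth, threshold_px):
    """Percentage of valid pixels whose disparity error exceeds threshold_px.

    Pixels whose ground truth is None are skipped (no measurement available).
    """
    total = 0
    bad = 0
    for pred_row, gt_row in zip(predicted, ground_truth):
        for pred, gt in zip(pred_row, gt_row):
            if gt is None:
                continue
            total += 1
            if abs(pred - gt) > threshold_px:
                bad += 1
    if total == 0:
        raise ValueError("ground truth contains no valid pixels")
    return 100.0 * bad / total


# Tiny 2x2 example: the errors are 0.5, 3.0 and 5.0 px, one pixel has no truth.
ground_truth = [[10.0, 20.0], [30.0, None]]
predicted = [[10.5, 23.0], [35.0, 7.0]]

bp2 = bad_pixel_percentage(predicted, ground_truth, 2.0)  # 2 of 3 pixels are bad
bp4 = bad_pixel_percentage(predicted, ground_truth, 4.0)  # 1 of 3 pixels is bad
print(round(bp2, 1), round(bp4, 1))  # 66.7 33.3
```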

Free space, near and far objects

Proximity segmentation can be used to determine whether an obstacle is within a proximity field and to avoid collisions with obstacles during navigation. Proximity segmentation predicts free space from the ground plane, eliminating the need to perform ground plane removal from the segmentation image.

This is used in turn to produce a 3D occupancy grid that indicates free space in the neighborhood of the robot. This camera-based solution offers a number of appealing advantages over traditional 360° lidar occupancy scanning, including better detection of low-profile obstacles.
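As a minimal sketch of the occupancy grid idea, the snippet below stores obstacle points in a sparse 3D voxel set. It assumes the points have already been classified as obstacles (not ground) by an upstream segmentation step, and it treats every voxel without an obstacle point as free. The helper names and the 0.5 m voxel size are illustrative choices, not the Isaac ROS implementation.

```python
import math


def voxel_index(point, voxel_size):
    """Map a metric (x, y, z) point to integer voxel coordinates."""
    return tuple(math.floor(c / voxel_size) for c in point)


def build_occupancy(obstacle_points, voxel_size=0.5):
    """Return the set of voxels that contain at least one obstacle point."""
    return {voxel_index(p, voxel_size) for p in obstacle_points}


def is_free(occupied, point, voxel_size=0.5):
    """A location counts as free when its voxel holds no obstacle point."""
    return voxel_index(point, voxel_size) not in occupied


# Hypothetical obstacle points in the robot frame, in metres.
obstacles = [(1.2, 0.3, 0.1), (1.3, 0.4, 0.2), (-0.7, 2.0, 0.0)]
occupied = build_occupancy(obstacles)

print(sorted(occupied))                      # [(-2, 4, 0), (2, 0, 0)]
print(is_free(occupied, (1.4, 0.1, 0.4)))    # False, shares a voxel with an obstacle
print(is_free(occupied, (0.2, 0.2, 0.2)))    # True
```

A production grid would also distinguish unobserved space from observed free space, which this sketch leaves out.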

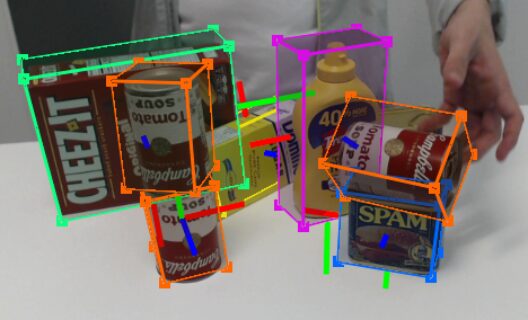

DOPE works on pre-trained, known objects

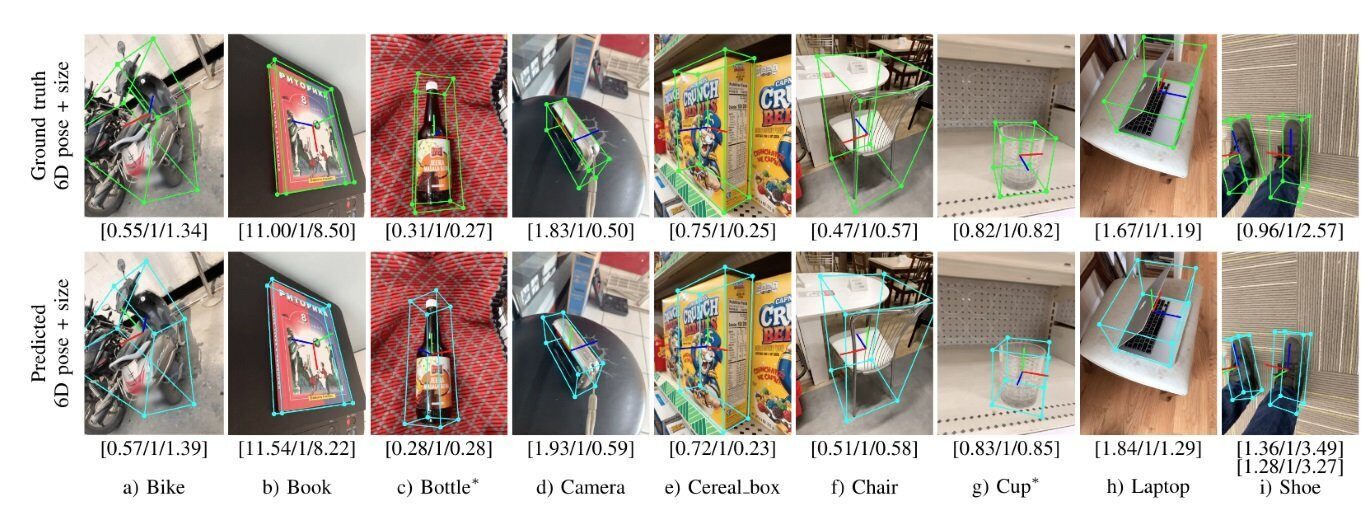

Pose Estimation means calculating the 3D position and 3D orientation of an object by analyzing a (single) 2D image or 3D point cloud.
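Such a 6D pose is commonly stored as a translation plus a unit quaternion and converted to a 4x4 homogeneous transform when points need to be moved between frames. The `Pose` class below is a generic sketch of that representation in plain Python, not the message type used by the Isaac ROS packages.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Pose:
    position: Tuple[float, float, float]             # x, y, z in metres
    orientation: Tuple[float, float, float, float]   # unit quaternion (x, y, z, w)

    def to_matrix(self) -> List[List[float]]:
        """Return the 4x4 homogeneous transform for this pose."""
        x, y, z, w = self.orientation
        tx, ty, tz = self.position
        return [
            [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w), tx],
            [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w), ty],
            [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y), tz],
            [0.0, 0.0, 0.0, 1.0],
        ]


def transform_point(matrix, point):
    """Apply a 4x4 transform to a 3D point."""
    px, py, pz = point
    return tuple(
        row[0] * px + row[1] * py + row[2] * pz + row[3] for row in matrix[:3]
    )


# Object rotated 90 degrees about the z axis and placed 1 m in front of the camera.
half = math.radians(90.0) / 2.0
pose = Pose(position=(0.0, 0.0, 1.0),
            orientation=(0.0, 0.0, math.sin(half), math.cos(half)))

# The object's local x axis tip ends up along the camera's y axis.
corner = transform_point(pose.to_matrix(), (1.0, 0.0, 0.0))
print([round(c, 6) for c in corner])
```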

Using a deep-learned pose estimation model and a 2D camera, two packages can estimate the pose of a target object.

CenterPose works on known categories of unknown objects.

The NVIDIA GPU Cloud (NGC) hosts a catalog of (encrypted) pre-trained deep learning models that can be converted for use in the Isaac ROS stack. The NGC platform includes pre-trained models and workflows for a variety of deep learning tasks, such as image recognition, natural language processing, and speech recognition.

TAO is a set of tools and workflows designed to help developers adapt pre-trained deep learning models to their specific use cases. This is called Transfer Learning. The TAO toolkit decrypts NGC models, does data preparation, model adaptation, and optimization for deployment on NVIDIA hardware. By using the TAO toolkit, developers can quickly and easily customize pre-trained models for their specific needs, without having to start from scratch with their own training data.

These are hardware-accelerated packages for recording and playing back compressed video data. Video data collection is an important part of training AI perception models. On the NVIDIA Jetson AGX Orin platform, these new GEMs can reduce the image data footprint by ~10x.
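To get a feeling for what a ~10x reduction means in practice, here is a back-of-the-envelope calculation. The camera settings (1920 by 1200 pixels, 3 colour channels, 30 FPS) and the 10-minute recording length are hypothetical; only the factor of 10 comes from the text above.

```python
def raw_bytes_per_second(width, height, channels, fps, bytes_per_channel=1):
    """Data rate of an uncompressed image stream."""
    return width * height * channels * bytes_per_channel * fps


def storage_gigabytes(bytes_per_second, minutes):
    """Storage needed to record for the given number of minutes (1 GB = 1e9 bytes)."""
    return bytes_per_second * 60 * minutes / 1e9


COMPRESSION_FACTOR = 10  # the ~10x footprint reduction quoted above

raw_rate = raw_bytes_per_second(1920, 1200, 3, 30)
compressed_rate = raw_rate / COMPRESSION_FACTOR

print(f"raw:        {raw_rate / 1e6:.1f} MB/s, "
      f"{storage_gigabytes(raw_rate, 10):.1f} GB per 10 min")
print(f"compressed: {compressed_rate / 1e6:.1f} MB/s, "
      f"{storage_gigabytes(compressed_rate, 10):.1f} GB per 10 min")
```

For a single camera this is the difference between roughly 124 GB and 12 GB for a ten-minute recording, which matters quickly on a robot with several cameras.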

While the NVIDIA Isaac ROS software release includes a range of features and packages that are useful for building autonomous mobile robots, there are still some capabilities that are not included and may need to be sourced from elsewhere. Here are a few examples:

The Isaac ROS software is a set of software tools and packages designed to run on top of the NVIDIA Jetson CPU/GPU board. Developers will still need to source and build the actual robot hardware, including things like the chassis, wheels, motors, sensors, cameras and other components. Take a look at our previous blog post A Guide to Creating a Mobile Robot for Indoor Transportation for some tips and tricks there.

NVIDIA provides two reference designs: the Carter robot and the Kaya robot.

The NVIDIA Carter 2.0 robot

While the Isaac ROS software includes some packages related to navigation, such as Free Space Segmentation and VSLAM, the NVIDIA Isaac Navigation GEM is not part of the ROS stack. In addition, the Isaac SDK depends on LIDAR for tasks like path planning and obstacle avoidance.

While the Isaac ROS software includes some packages for monitoring and managing the robot, developers may need to build custom user interfaces and dashboards for specific applications. The most popular visualization and interaction dashboards today are RViz, Robot Web Tools and Foxglove Studio.

While the Isaac ROS software includes some packages for training and deploying machine learning models, developers may need to source or develop their own models or use the NVIDIA GPU Cloud (NGC) for specific tasks or applications.

Okay, it may have taken a bit more than five minutes, but this is a fully packed release ;-)


More questions?

Get in touch with us on our Support page and we’ll gladly assist you!
