Accuracy and Performance

cuVSLAM is one of the few pure-GPU VSLAM implementations, and it achieves low translation and rotation errors on the KITTI Visual Odometry / SLAM Evaluation 2012 public dataset. It outperforms ORB-SLAM2, a popular open-source SLAM library, on both translation and rotation error, but underperforms SOFT-SLAM and SOFT2, the current leaders on the KITTI benchmark. SOFT, however, has only been published as MATLAB code that runs on the CPU, and it takes advantage of the planar motion in the dataset, which makes it unsuitable for hand-held or full 3D VSLAM.

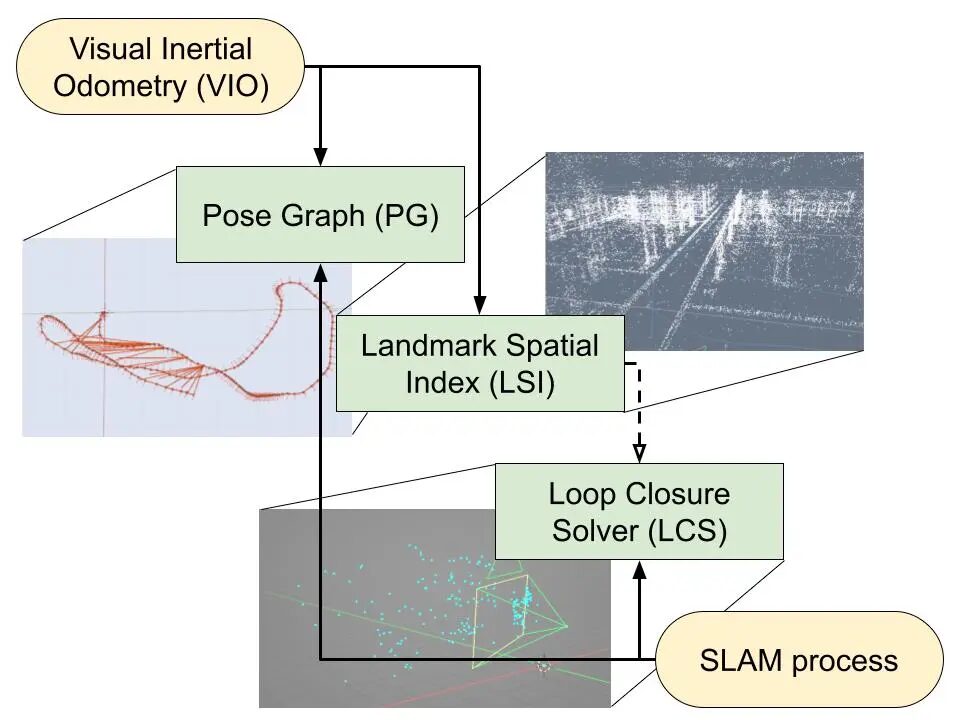
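
To make the translation and rotation error figures more concrete, here is a minimal sketch of a KITTI-style relative pose error for a single trajectory segment. It is an illustration under stated assumptions, not the official evaluation code: the full benchmark averages over many segments of different lengths, the helper names (`rot_z`, `inverse`, `compose`, `relative_pose_error`) are hypothetical, poses are represented as simple `(R, t)` pairs, and the toy poses are made up.

```python
import math

def rot_z(yaw):
    """3x3 rotation about the z axis (used only to build toy poses)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def mat_mul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def mat_vec(m, v):
    return [sum(x * y for x, y in zip(row, v)) for row in m]

def inverse(pose):
    """Invert a rigid transform given as (R, t): the result is (R^T, -R^T t)."""
    rot, trans = pose
    rot_t = [list(row) for row in zip(*rot)]
    return rot_t, [-x for x in mat_vec(rot_t, trans)]

def compose(a, b):
    """Return the transform a * b, i.e. apply b first, then a."""
    rot_a, trans_a = a
    rot_b, trans_b = b
    return mat_mul(rot_a, rot_b), [x + y for x, y in zip(mat_vec(rot_a, trans_b), trans_a)]

def relative_pose_error(gt_i, gt_j, est_i, est_j):
    """Return (translation error in meters, rotation error in radians) for segment i -> j."""
    gt_delta = compose(inverse(gt_i), gt_j)      # ground-truth motion over the segment
    est_delta = compose(inverse(est_i), est_j)   # estimated motion over the segment
    err_rot, err_trans = compose(inverse(est_delta), gt_delta)
    t_err = math.sqrt(sum(x * x for x in err_trans))
    # Rotation angle recovered from the trace of the residual rotation matrix.
    trace = err_rot[0][0] + err_rot[1][1] + err_rot[2][2]
    r_err = math.acos(max(-1.0, min(1.0, (trace - 1.0) / 2.0)))
    return t_err, r_err

# Toy data (made up): the ground truth moves 100 m straight ahead; the estimate
# overshoots by 1 m and drifts 0.01 rad in yaw.
identity = rot_z(0.0)
gt_start, gt_end = (identity, [0.0, 0.0, 0.0]), (identity, [100.0, 0.0, 0.0])
est_start, est_end = (identity, [0.0, 0.0, 0.0]), (rot_z(0.01), [101.0, 0.0, 0.0])

segment_length_m = 100.0
t_err, r_err = relative_pose_error(gt_start, gt_end, est_start, est_end)
translation_error_percent = 100.0 * t_err / segment_length_m
rotation_error_deg_per_m = math.degrees(r_err) / segment_length_m
```

In this toy case the estimate is off by 1 m over a 100 m segment, so the translation error is 1%. Normalizing by segment length is what lets sequences of different lengths be compared on the same scale.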

In addition to standard benchmarks, NVIDIA tests loop closure for VSLAM on sequences longer than 1000 meters, covering both indoor and outdoor scenes. This helps verify that the system can handle a wide variety of environments and conditions.

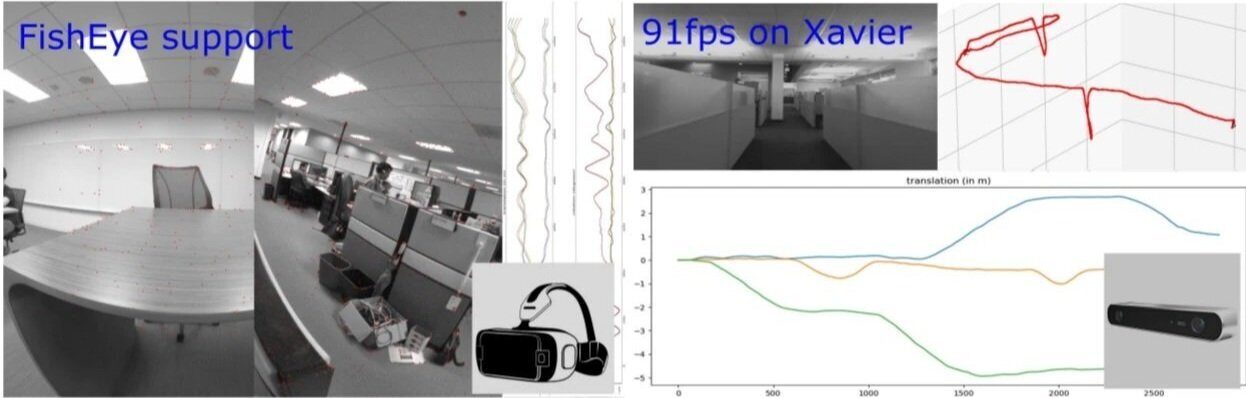

The run-time performance of the cuVSLAM Node is especially impressive across platforms. On an AGX Orin, it achieves 232 frames per second (fps) at 720p resolution; on an x86_64 platform with an RTX 4060 Ti, it reaches 386 fps. These are not typical robot hardware, but on the more representative Orin Nano 8G it still achieves 116 fps, far more than a typical robot requires.
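
To put these throughput numbers in perspective, the short sketch below converts each reported frame rate into an average per-frame processing time and compares it with a camera frame rate. The 30 fps camera rate is an assumption chosen for illustration, not a figure from the benchmark, and the function names are hypothetical. The conversion also assumes frames are processed one at a time, so throughput maps directly to time per frame.

```python
# Throughput figures quoted in the text (frames per second at 720p).
reported_fps = {
    "AGX Orin": 232,
    "x86_64 + RTX 4060 Ti": 386,
    "Orin Nano 8G": 116,
}

# Assumption for illustration only: a camera that delivers 30 frames per second.
CAMERA_FPS = 30

def frame_time_ms(fps):
    """Average processing time per frame, in milliseconds."""
    return 1000.0 / fps

def headroom(fps, camera_fps=CAMERA_FPS):
    """How many times faster than the camera rate the pipeline runs."""
    return fps / camera_fps

for platform, fps in reported_fps.items():
    print(
        f"{platform}: {frame_time_ms(fps):.2f} ms per frame, "
        f"{headroom(fps):.1f}x a {CAMERA_FPS} fps camera"
    )
```

Under that assumption, even the Orin Nano 8G spends roughly 8.6 ms on a frame that arrives every 33 ms, leaving most of the compute budget for the rest of the robot's software stack.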